This is an after-the-fact progress update on the Mother May I project that I did with Kate Vrijmoet a couple of weeks ago at the BWAC space in Brooklyn, NYC for their Wide Open 3 National Juried Art Show.

Once we got to the space, it seemed that the piece wasn’t really understood, and we were kind of talking in loops. I set the projector up so that we could see exactly how much space the projection would be taking up on the floor.

This helped, but we ended up moving the pile of stuff over to a somewhat enclosed area between two spaces. That was good, because we could control the lighting and audio in the space pretty well, but potentially not great, because there might not be enough people going through it. It also wasn’t an exact fit for the shape we were planning on, so we went for it anyway, expecting that the shapes projected on the floor would have to be changed out. By the end of the 1st day of setup we had the projector mounted in the ceiling. We were ready to finish the physical setup in the morning, with the mirror bouncing the projector’s light, and to get going on tweaking the software to fit the space.

When I got back the next morning, everything was on the floor. At first I misunderstood, and thought that James, the person next to us, had just taken everything down because he liked the space for his projection. Thankfully, that was not the case. It was Kate still wanting a better space, along with James needing a space for his projection, and (I believe) the people volunteering at BWAC trying to help us find a space that would work. The end result was that we didn’t get much more than the physical setup done on the 2nd day, but that at least included finding a mirror in the cavernous nearby IKEA.

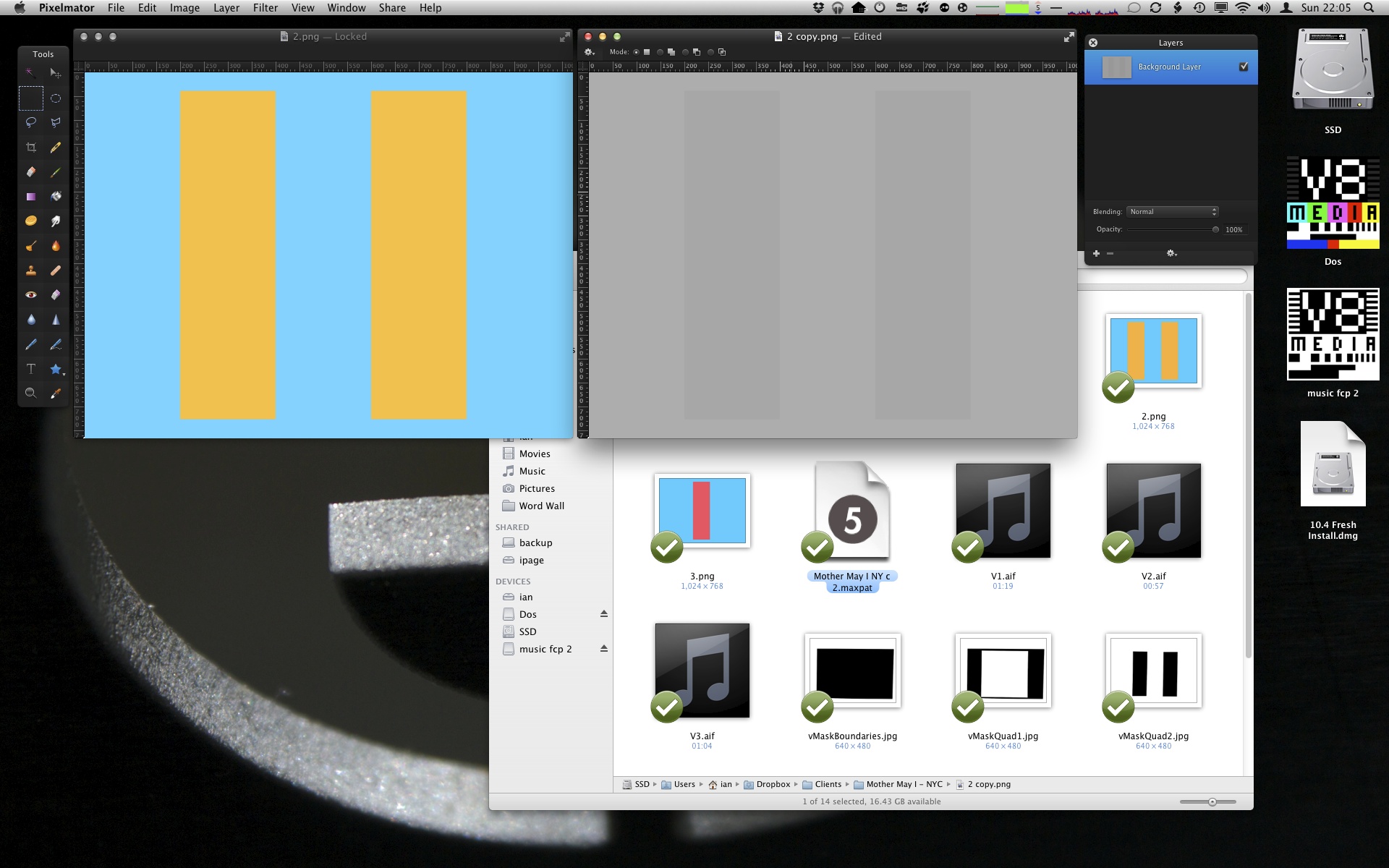

We were going in fairly blind as to what kind of space we would be installing Mother May I into. From photos of the BWAC space it looked like a bunch of beams, columns, and walls in a fairly well-lit space. The first version of MMI used a paint outline on the floor to designate the area of interaction, but we didn’t think we were going to have that option, so we decided to go with a projector. Along with being able to outline a space with light, this gave us a bit of “feature creep,” as we were now able to change the lighting through the use of the projector. Add the motion detection that triggers the audio into the mix, and the idea now became: when movement gets detected in a zone, that zone on the floor will get lit up.

One main problem with this is that anything that happens in sight of the camera within the designated area gets seen as a difference between the frames being compared. In other words, once we let people see that motion had been detected, and not just hear it, the projector lit up an area, and that change in lighting was itself detected as motion. Not so good.
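
To make the problem concrete, here is a minimal sketch of frame comparison per zone. It is not the actual MMI code: the frames are plain 2D lists of grayscale values, and the function name, zone layout, and thresholds are all made up for illustration.

```python
# Hypothetical sketch of per-zone frame differencing (not the MMI code).
# Frames are 2D lists of grayscale values from 0 to 255.

def zone_motion(prev, curr, zones, pixel_threshold=25, min_changed=4):
    """Return the names of zones where enough pixels changed between frames."""
    active = []
    for name, (x0, y0, x1, y1) in zones.items():
        changed = sum(
            1
            for y in range(y0, y1)
            for x in range(x0, x1)
            if abs(curr[y][x] - prev[y][x]) > pixel_threshold
        )
        if changed >= min_changed:
            active.append(name)
    return active


# Assumed layout: an 8x4 camera image split into a left and a right zone.
WIDTH, HEIGHT = 8, 4
ZONES = {"left": (0, 0, 4, 4), "right": (4, 0, 8, 4)}

# A dark floor, then the same floor with the projector lighting the right zone.
dark = [[20] * WIDTH for _ in range(HEIGHT)]
lit = [[20] * 4 + [200] * 4 for _ in range(HEIGHT)]

print(zone_motion(dark, dark, ZONES))  # nothing changed between frames
print(zone_motion(dark, lit, ZONES))   # the projector's own light shows up as motion
```

Nobody moved between the two frames in the second call, but the right zone still registers as active, because the lighting change alone is a big enough difference.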

To get around this, I decided to just ignore it. Or, to be more precise: my motion detection only looks at a grayscale version of what the camera sees. To keep the detection from seeing any changes, we had to pick colors that were all of similar tone or darkness. Once they were the same overall tone, the detection couldn’t see that anything had changed within its vision. We see colors, and so does the camera, but the motion detection was blind to those changes. Color blind, as it were.
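
As a rough illustration of picking same-tone colors, here is a small sketch. It assumes the grayscale conversion uses the common Rec. 601 luma weights (0.299, 0.587, 0.114), which may not be what the camera software actually does, and `luma` and `match_tone` are hypothetical helper names. A real camera also won't see projected colors exactly as specified, so treat this as a starting point for tweaking by eye.

```python
# Hypothetical sketch: scale colors so they share the same grayscale tone.
# Assumes Rec. 601 luma weights; the real grayscale conversion may differ.

def luma(rgb):
    """Approximate grayscale value (0-255) of an (r, g, b) color."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def match_tone(rgb, target):
    """Scale a color so its luma is close to target, keeping its hue.

    Channels are clamped to 255, so a target that is too bright for a
    given hue (for example, pure blue) will not be reached.
    """
    current = luma(rgb)
    if current == 0:
        # Black has no hue to preserve, so fall back to a neutral gray.
        return (round(target),) * 3
    scale = target / current
    return tuple(min(255, round(c * scale)) for c in rgb)


TARGET = 25  # low enough that red, green, and blue can all reach it
palette = [match_tone(c, TARGET) for c in [(255, 0, 0), (0, 255, 0), (0, 0, 255)]]

for color in palette:
    print(color, round(luma(color), 1))
```

The three colors still look clearly different to the eye, but their grayscale values land within about one level of each other, well under any reasonable motion threshold.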